Android How to Upload a File to a Google Cloud Platform Bucket

Exporting data from/to Google Cloud Platform

A guide on how to manage data flows around GCP

I already discussed some functionalities of GCP in another post, which will guide you in automating the execution of simple code chunks.

One thing that might come in handy is not only to execute code on GCP, but also to export the result of that code as a file somewhere you can access it.

Note that the views expressed here are my own. I am not a GCP certified user, and the procedures described below are my own doing, inspired by many sources online and time spent tweaking around the cloud. There might be better ways to achieve the goals stated below.

In this post, I will discuss three different approaches that will allow you to export the result(s) of your code execution to:

- 1) Cloud Bucket (with an option to BigQuery tables): BigQuery tables will be useful if you need to perform additional operations on GCP, such as running regular ML predictions on certain data tables. They can also be accessed by third-party software such as Tableau or Power BI if you want to fetch data for visualization.

- 2) Google Drive: a Google Drive export would allow you to provide easy and readable access to many users, if the data is to be shared on a regular basis for further personal use.

- 3) FTP server: your organization might be using an FTP server to exchange and/or store data.

1. Exporting to a GCP bucket

1) Create GCP Bucket

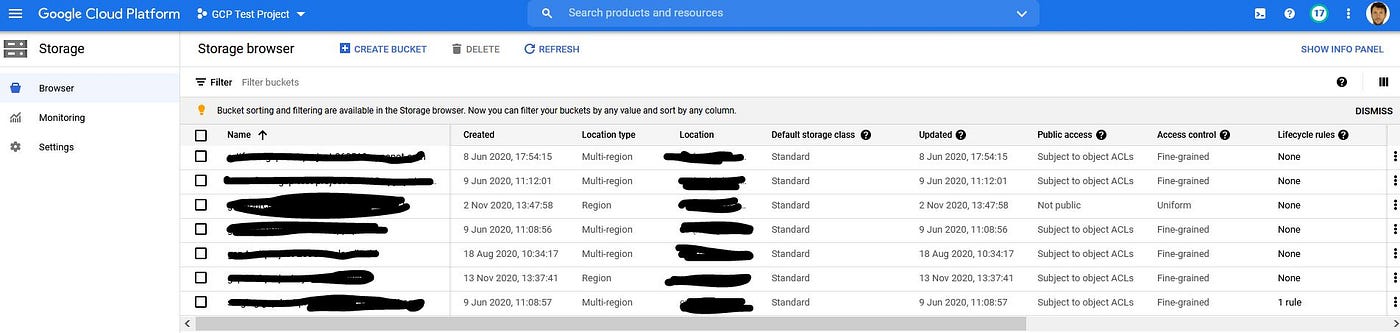

To export a file to BigQuery tables, you should first export your data to a GCP bucket. The storage page will display all buckets currently existing and give you the opportunity to create one.

Go to the Cloud Storage page, and click on Create a Bucket. See the documentation to configure the different parameters of your bucket.

Once created, your bucket will be accessible with a given name, my_test_bucket.

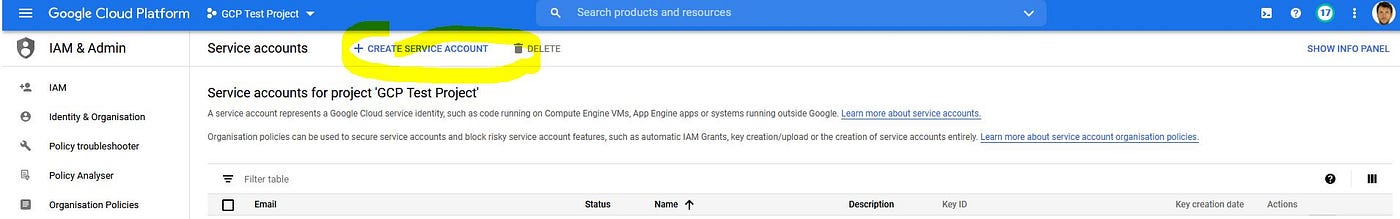

2) Create Service Account Key

I recommend you use a service account key to monitor and control the access to your bucket. You can create a service account key here, which will allow you to link the key to your bucket. Prior identification using the key will be required to download and upload files to the bucket.

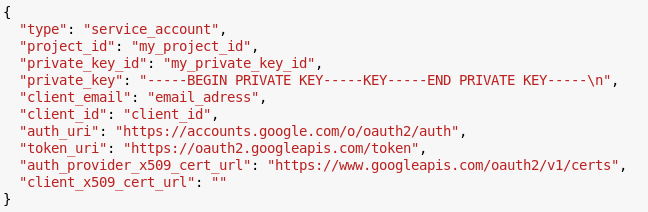

The key will be a .json file with the following structure:
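The key structure itself is not reproduced here. As a rough guide, the sketch below lists the fields a downloaded service account key typically contains and checks a loaded key against them. The helper names `missing_key_fields` and `load_key` are my own illustration, not something from the original post, and the field list may differ slightly depending on when the key was generated.

```python
import json

# Fields typically found in a service account key file downloaded from GCP.
EXPECTED_KEY_FIELDS = (
    "type",
    "project_id",
    "private_key_id",
    "private_key",
    "client_email",
    "client_id",
    "auth_uri",
    "token_uri",
    "auth_provider_x509_cert_url",
    "client_x509_cert_url",
)


def missing_key_fields(key: dict) -> list:
    """Return the expected fields that are absent from a loaded key."""
    return [field for field in EXPECTED_KEY_FIELDS if field not in key]


def load_key(path: str) -> dict:
    """Load a service account key from a .json file (hypothetical helper)."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

Calling `missing_key_fields(load_key("service_account_key.json"))` on a complete key should return an empty list; the file name is a placeholder.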

3) Enter Python code snippet in code file

Once your destination bucket and service account key are created, you can proceed with copying the file(s) to the bucket using Python code.

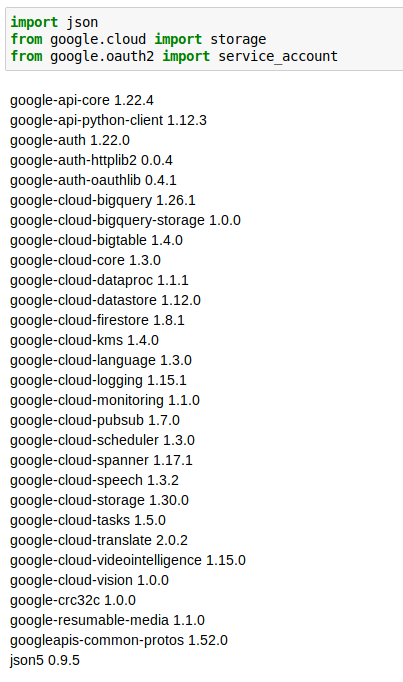

1. First, we import the required libraries. I used the following versions:

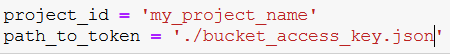

2. We then define the project ID under which you are working, as well as the path to the service account token you previously generated.

3. We can now proceed with the identification using the token:

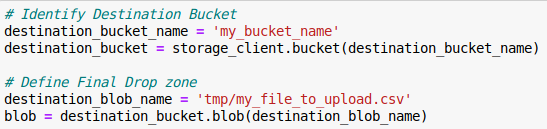

4. Now that we are identified, we need to (i) define the destination bucket (that you created at the beginning of the tutorial) and (ii) define the drop zone, that is, where the files will be copied in the bucket. In my case, I copy the file to a subfolder /tmp (there are no real subfolders in the bucket, but it is displayed as such):

5. Upload the file to the bucket

If you now access your bucket, you should see the file copied in a subfolder /tmp. Depending on the rights that your account has been given, you might not be able to create a subfolder inside the bucket if that folder is not already present. Similarly, you might not be able to create a file, but only to edit it. If you run into issues in the steps described above, try to copy to the bucket's root and/or upload a file with the same filename manually. I am not 100% sure these concerns are valid though.
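The five steps above can be sketched as follows. This is a minimal version assuming the `google-cloud-storage` library; it is not necessarily the exact code of the original post. The function names, the default drop zone and all argument values are placeholders of my own.

```python
import os


def build_blob_name(drop_zone: str, filename: str) -> str:
    """Join the drop zone and the file name into an object name such as 'tmp/data.csv'."""
    drop_zone = drop_zone.strip("/")
    return f"{drop_zone}/{filename}" if drop_zone else filename


def upload_to_bucket(local_path, bucket_name, key_path, project_id, drop_zone="tmp"):
    """Copy a local file to the bucket and return the name of the created object."""
    # Imported here so that build_blob_name can be used without the library installed.
    from google.cloud import storage

    # Steps 2 and 3: identify with the service account key for the given project.
    client = storage.Client.from_service_account_json(key_path, project=project_id)

    # Step 4: destination bucket and drop zone ("subfolders" are only name prefixes).
    bucket = client.bucket(bucket_name)
    blob_name = build_blob_name(drop_zone, os.path.basename(local_path))

    # Step 5: upload the file.
    bucket.blob(blob_name).upload_from_filename(local_path)
    return blob_name
```

A call could look like `upload_to_bucket("data.csv", "my_test_bucket", "service_account_key.json", "my-project-id")`, where everything except the bucket name is hypothetical. Passing `drop_zone=""` copies the file to the bucket's root.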

4) Optional: Export file to a BigQuery table

We just exported the data as a .csv file to a Cloud Bucket. You might however want to keep the data in a tabular format on the cloud. That could be useful if you want to launch an ML pipeline on a table, or connect third-party software (e.g. Tableau) to the data.

Since these steps are well described in the Google documentation, I won't go into details here. I would nevertheless recommend, given the low cost of storing data on GCP, to perform this cloud storage of your data, so that you can always get access to it and automate its use.
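For readers who want a starting point anyway, here is a minimal sketch of loading the .csv from the bucket into a table, assuming the `google-cloud-bigquery` library. The function names are my own, `table_id` is expected in the form `project.dataset.table`, and the header row and schema auto-detection settings are assumptions about your file rather than something the original post specifies.

```python
def gcs_uri(bucket_name: str, blob_name: str) -> str:
    """Build the gs:// URI of an object stored in a bucket."""
    return f"gs://{bucket_name}/{blob_name.lstrip('/')}"


def load_csv_into_table(bucket_name, blob_name, table_id, key_path):
    """Load a .csv stored in a bucket into a BigQuery table and return its row count."""
    # Imported here so that gcs_uri can be used without the library installed.
    from google.cloud import bigquery

    client = bigquery.Client.from_service_account_json(key_path)
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        skip_leading_rows=1,  # assumption: the first row is a header
        autodetect=True,  # assumption: let BigQuery infer the schema
    )
    load_job = client.load_table_from_uri(
        gcs_uri(bucket_name, blob_name), table_id, job_config=job_config
    )
    load_job.result()  # wait for the load job to finish
    return client.get_table(table_id).num_rows
```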

2. Exporting to Google Drive

Exporting a file to Google Drive can have quite some advantages, in particular if users in your organization are not familiar with cloud technology & interfaces, and prefer to navigate using good old spreadsheets. You can then save your data in an .xlsx or .csv and export the file to a drive to which users have access.

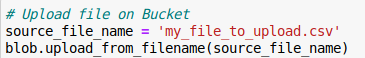

We will be using PyDrive to control the upload and download of files to a Google Drive. You will need to obtain a client_secrets.json file to link your code to the account you want to copy the file to. You can create that file easily by following the steps described here.

I followed these steps here to copy a file to a GDrive. The nice thing with that solution is that the authentication to the Google Account is only required once, which creates a file mycreds.txt. That file is then used in further authentication processes to bypass manual authentication.

Executing this function the first time will trigger the opening of a Google Authentication step in your browser. Upon successful authentication, further executions of the export will use the mycreds.txt file to access the drive directly and copy the data.

As you may have noticed, this solution can also be used on platforms other than GCP, or simply locally. To use it in GCP, simply authenticate once to generate a mycreds.txt file. If you execute your code manually, then make sure the file is present in your code repo. If you use Docker to execute your code regularly and automatically, then make sure to copy that file in your Dockerfile.

That's it!

Oh, by the way, it is equally simple to fetch data from Google Drive to GCP, using the same logic. See the function below.
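A minimal sketch of such a fetch function, assuming `drive` is an already authenticated PyDrive `GoogleDrive` object. The function names and the choice to take the first match are my own assumptions, not the original post's code.

```python
def drive_query(filename, folder_id=None):
    """Build a Drive search query for a file title (titles with quotes are not handled)."""
    query = f"title = '{filename}' and trashed = false"
    if folder_id:
        query = f"'{folder_id}' in parents and " + query
    return query


def fetch_from_drive(drive, filename, local_path, folder_id=None):
    """Download the first Drive file matching filename to local_path."""
    matches = drive.ListFile({"q": drive_query(filename, folder_id)}).GetList()
    if not matches:
        raise FileNotFoundError(f"{filename} not found on the drive")
    # Several files can share a title on Drive; this sketch takes the first one.
    matches[0].GetContentFile(local_path)
    return local_path
```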

3. Exporting to an FTP server

As mentioned previously, you might want to export some data to an FTP server for further sharing. For example, I had to set up a daily export of data for a team of data analysts, which should be delivered to an FTP server to which they had access. The data would be fetched through an API call, munged and exported to the FTP server every day.

I use pysftp, version 0.2.9.

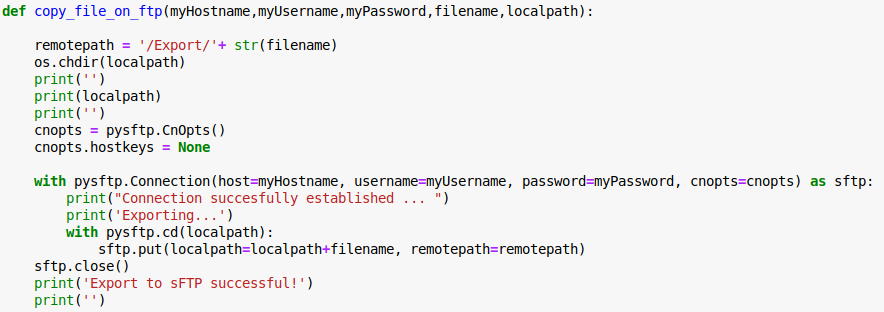

You will simply need the hostname, username and password of the FTP server, which should be provided by your organization. You can use the function below to send a file filename located in localpath to the FTP server.

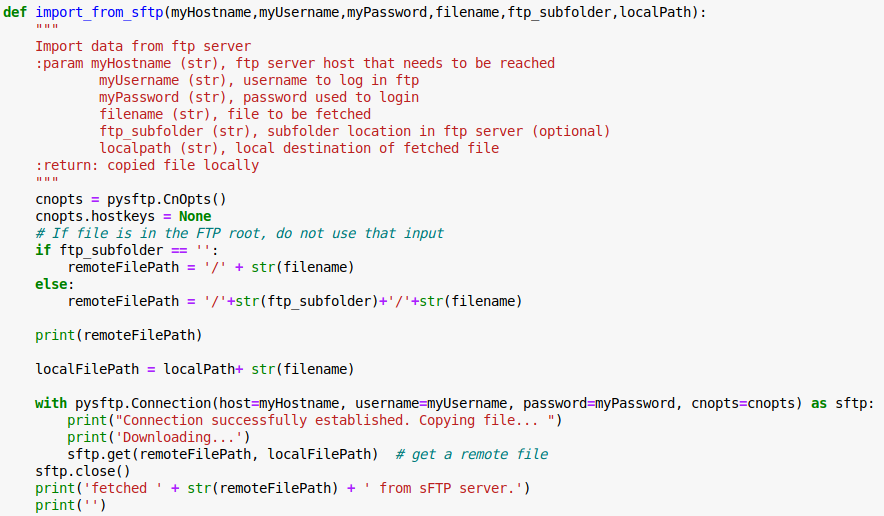
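A minimal sketch of such a function, using the parameter names mentioned above. Note that pysftp talks SFTP (FTP over SSH), so this assumes your server accepts SFTP connections. The function name is my own.

```python
import os


def send_file_to_ftp(filename, localpath, hostname, username, password):
    """Send the file `filename` located in `localpath` to the server's default folder."""
    # Imported here so the module can be loaded without pysftp installed.
    import pysftp

    local_file = os.path.join(localpath, filename)
    with pysftp.Connection(host=hostname, username=username, password=password) as sftp:
        # The remote file keeps the same name as the local one.
        sftp.put(local_file, filename)
```

With the default connection options, pysftp checks the server's host key against your known_hosts file, so the connection may be refused until that key has been added.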

As in the GDrive case, you can use the same library to get a file from an FTP server to GCP for further use.

The function below has one additional parameter, ftp_subfolder, which provides, if applicable, the subfolder in which the file of interest is located.
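A minimal sketch of the download counterpart, again assuming an SFTP-capable server. The function name is hypothetical; `ftp_subfolder` is the parameter described above and is optional here.

```python
import os
import posixpath


def get_file_from_ftp(filename, localpath, hostname, username, password, ftp_subfolder=None):
    """Download `filename` from the server into `localpath` and return the local path."""
    # Imported here so the module can be loaded without pysftp installed.
    import pysftp

    # Remote paths use forward slashes regardless of the local operating system.
    remote_file = posixpath.join(ftp_subfolder, filename) if ftp_subfolder else filename
    local_file = os.path.join(localpath, filename)
    with pysftp.Connection(host=hostname, username=username, password=password) as sftp:
        sftp.get(remote_file, local_file)
    return local_file
```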

4. Final words

That's it! These three approaches will allow you to implement a few data flows from & to several data hubs that might be used around your organization. Remember that these procedures are my own doing, and there might be room for improvement! :)

Combining these methods with automatic code execution should allow you to deploy simple data processes to make your life easier and your work cleaner ;)

As always, I look forward to comments & constructive criticism!


Source: https://towardsdatascience.com/exporting-data-from-google-cloud-platform-72cbe69de695
